C/C++ Toolchain usage on Windows from scratch with just PowerShell

Even if you use a build system, you should be aware of what the build system does for you.

TLDR: Jump to Dependencies and Overview headers.

I will be providing all code step by step with documentation for related tools used.

I’ll be assuming that the reader has some basic understanding of a compiler and linker, along with shell scripting. If you don't, I recommend watching some Handmade Hero and reading the MSDN documentation for PowerShell. I’ll only be doing a basic overview.

My development environment is Windows. I’m only peripherally versed in Unix-like environments. The content should still be translatable to those environments, however.

Headspace

C/C++ is one of those platforms that is known for having an abstruse toolchain interface to get anything done pragmatically.

If you're a C/C++ developer on Windows, you most likely got your start on Visual Studio, letting its IDE handle most of the grime about to be covered in this post.

I’ve used the Scons, Meson, and CMake build systems as well ( CMake arguably is more dumpster fire than it's worth ). They deal with the toolchain and give you a high-level scripting language to work with instead.

Build systems worth looking into but I have no experience with :

cbuild : Define your build using a C/C++ program.

zig build : Use zig as the build configuration platform.

My current choice if I have to set one up for automated production would be Meson. It does the job reliably and is less clunky than Scons.

Handmade

When I watched Casey’s video explaining how to set up a Windows build, I was exposed to the grimy toolchain being handled by all these build systems.

But the prize I’ve gotten from the handmade community is the ability to keep it simple and fast.

This makes iterating on a build, and tailoring a build config to a project, work well if two principles are followed.

Don’t over-generalize it: write each configuration you need for the target platform and OS, for the specific codebase.

Make the areas of the code you will rewrite well documented and easy to access.

Something I’ll be discussing more in future posts is, in the world of coding or software development, we have produced a culture divorced from the reality of doing what’s actually required to produce software productively.

Our software to build software is not excused from this. This post will show some notions of what building for C/C++ really requires from the language platform’s user perspective. Hopefully the reader comes away with a more grounded idea of what they actually need for their current or future projects.

Dependencies

We need a language platform vendor and shell to command the CLI toolchain the vendor exposed to the user.

LLVM : Alternative to Visual Studio, and my preference for its compile errors.

clang & clang++ ( not using clang-cl ) : C/C++ compilers.

lld-link : Linker.

scoop install llvm

or

winget install -e --id LLVM.LLVM

Warning : I'm not sure if winget sets up paths... see: issue on github. For this post, I’ll be assuming that all tools are exposed to the shell’s paths by default.

Powershell 7.2 or later : Unless something like cbuild is being done, you need a shell env to glue this mess of a system together.

Either scoop or winget have it if not on windows 11.

Visual Studio : It's required. Comes with most of the stuff we need.

MSVC : Microsoft’s compiler

Link : Microsoft’s linker

Installer : https://visualstudio.microsoft.com/downloads/

Overview

The whole point of a build for C/C++ is just to get each translation unit ( source file ) compiled into an “object” file, which is then sent over to the linker for processing.

For legacy reasons, these tools are all CLI based, without any config files that can be sent over to specify everything needed for a project in one go. Instead, the tools must be commanded once for every unit file ( *.cpp ) and once for every ‘set of’ *.obj files, with the project's flags redundantly sent each time ( in most cases ).

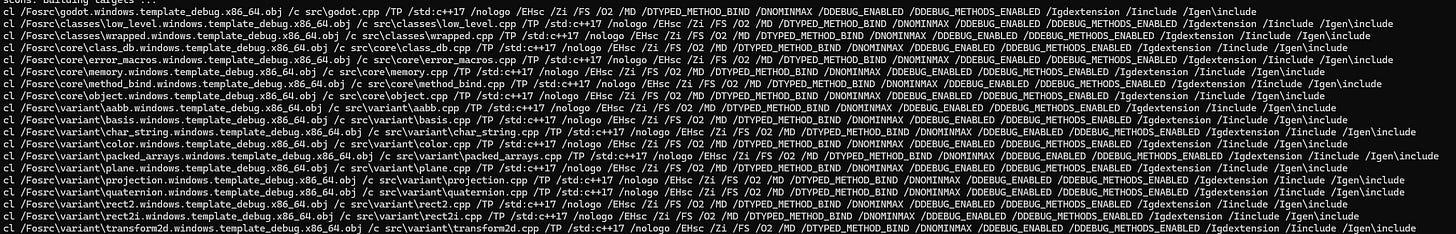

Example : godot’s C++ GDExtension build spamming all the flags:

Note: There are ways to hide those flags, the CLI compiler output doesn’t have to be a text wall.

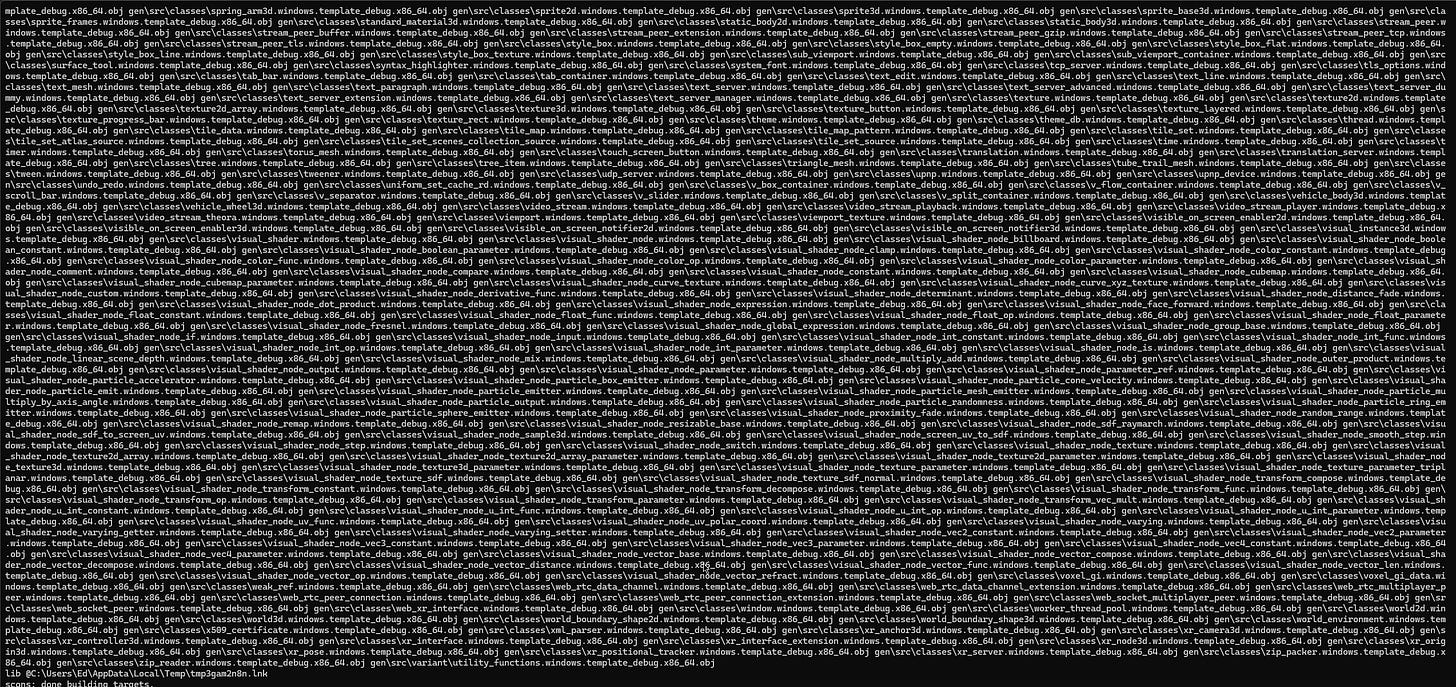

Thankfully, for linking this can be avoided by combining all object files into the executable binary in one go, if the project does not need hot-reloading or some sort of “moduled” separation. However, this can lead to an excessively long link time for large projects if abused ( because the linker is for the most part single-threaded ).

Anyway, you give the compiler all the contextual info required to compile a unit ( .cpp ) file into its output ( .obj file ). That file holds either almost-ready or ready machine instructions that can be processed into the final formatted binary during linking.

Project / Codebase cpp files → compiler processing → object files → linker processing → binary files ( dynamic libraries , or executables )

There is a separate process for static libraries ( lib for msvc, llvm-ar for llvm ). But essentially it's:

object files → object archiver/zipper → static library

which can then be used as follows:

object files + static libraries + dynamic libraries → linker processing → binary files

For the purposes of this breakdown we’ll ignore static libraries. If you understand this blog you should be able to gather the resources to get that working.
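As a rough illustration of the flow above, here is a dry-run sketch that only assembles the command lines a multi-unit build would issue: one compile per unit file, then one link for the whole set of object files. Nothing is executed. The `Get-BuildCommands` helper, the file names, and the `build` directory are hypothetical; `-c` and `-o` are clang's standard "compile only" and "output path" flags.

```powershell
# Hypothetical helper: returns the command lines as strings instead of running them.
function Get-BuildCommands {
    param(
        [string[]] $Units,
        [string]   $Executable,
        [string]   $PathBuild = 'build'
    )

    $commands = @()
    $objects  = @()

    # Compiler processing: one invocation per unit file.
    foreach ( $unit in $Units ) {
        $name      = [System.IO.Path]::GetFileNameWithoutExtension( $unit )
        $object    = "$PathBuild/$name.obj"
        $objects  += $object
        $commands += "clang++ -c $unit -o $object"
    }

    # Linker processing: one invocation for the whole set of object files.
    $commands += "clang++ $objects -o $Executable"

    return $commands
}

Get-BuildCommands -Units 'main.cpp', 'util.cpp' -Executable 'build/app.exe' |
    ForEach-Object { Write-Host $_ }
```

The last command goes through the clang++ driver, which hands the object files to the linker; the rest of this post calls the linker explicitly instead.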

All the fancy build systems we deal with in C/C++ are just package and dependency management on top, along with trying to abstract away insane gotchas; the defaults the language vendor toolchains infer are for archaic practices. This configuration has largely been set in stone for them, and most likely will never change.

You still need a robust build system for automating large pipelines of multiple dependencies or modules in a large project. But if your project is developed by a team of fewer than ~25 people ( mileage may vary ), with a high degree of curation for dependencies, then you can bypass one with just scripting in the shell.

What the script will look like

While writing this, I’ve been working on a project called gencpp. It's a ( relatively ) small library which will be the example use case.

It was originally organized as follows:

1. Import script helpers :
   devshell.ps1, target_arch.psm1 ( used for clang to get the target triplet automatically )
2. Process arguments : Any dynamics for the build process ( debug builds, which compiler to use if multiple are supported, selectively building only specific aspects, etc )
3. Resolve all general directory contexts
4. Building
   Set up the environment with all external paths that can be resolved with minimal hard-coding.
   Set up all flags relevant to the project for the compiler, linker, archiver, all the tools.
   Resolve any context-specific directories.
   For each unit file with a shared set of flags:
      Populate include paths
      Populate compiler arguments for all relevant unit files
      Run the compiler for all unit files
      Populate linker arguments for all relevant object files per binary
      Run the linker for all binaries
   Rinse and repeat until all modules and stages are complete.

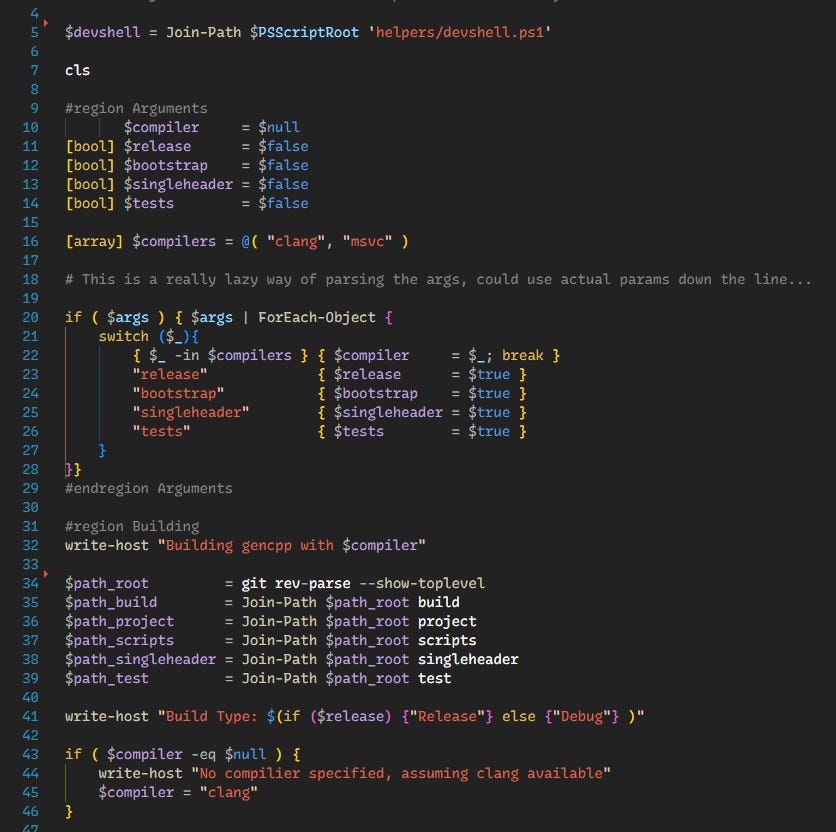

Steps 1-3 : Basic stuff

These are fairly simple. In the example below I made a very lazy argument parser that allows me to just type an argument without needing a value pair.
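For readers without the screenshot, a value-less argument parser of this kind can be sketched roughly as follows. This is not the script's actual parser: the `Read-BuildArgs` name and the option names are assumptions made for illustration.

```powershell
# Hypothetical option names; a real script would list its own.
function Read-BuildArgs {
    param( [string[]] $Arguments )

    $options = @{ vendor = 'clang'; release = $false; bootstrap = $false }

    # Each bare word flips an option; no "name value" pairs are needed.
    foreach ( $arg in $Arguments ) {
        switch ( $arg ) {
            'clang'     { $options.vendor    = 'clang' }
            'msvc'      { $options.vendor    = 'msvc'  }
            'release'   { $options.release   = $true   }
            'debug'     { $options.release   = $false  }
            'bootstrap' { $options.bootstrap = $true   }
            default     { Write-Host "Ignoring unknown argument: $arg" }
        }
    }
    return $options
}

# Inside a build script this would be: $options = Read-BuildArgs $args
$options = Read-BuildArgs @( 'msvc', 'release' )
Write-Host "vendor: $($options.vendor), release: $($options.release)"
```

The script could then be called as something like `.\build.ps1 msvc release bootstrap`, where the script name is also just a placeholder.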

The command:

git rev-parse --show-toplevel

is quite useful if the project is managed by a git repository.

For other VCSs you can use:

Perforce:

p4 client -o | grep "^Root:" | awk '{print $2}'

Plastic SCM:

cm pwk

SVN:

svn info --show-item wc-root

From here I push the root directory of the project onto the shell's location stack. This gives an objective default reference for any future commands in case the script is located in a separate directory ( as mine is ).

Push-Location $path_root

# Rest of the code here for the rest of the script...

Pop-Location # path_root

4. Building

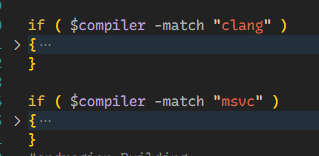

If the script is being used as a sort of all-in-one solution for building across different compilers or worse, between operating systems, just wrap the different implementations from here on out in simple conditionals:

These conditionals can either call a platform-specific script ( for example with Invoke-Expression ) or just have the code inlined. If the build doesn’t do much, such as having just a singular unit build, placing the code inline will be easiest.
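A minimal sketch of such conditionals, assuming a `$vendor` value that came out of the argument parsing step. The variable names are placeholders; `$IsWindows` is the automatic variable available since PowerShell 6.

```powershell
# $vendor would normally come from the script's arguments; hard-coded for the sketch.
$vendor = 'clang'

# $IsWindows is undefined on Windows PowerShell 5.1, so treat "missing" as Windows.
$on_windows = ( $null -eq $IsWindows ) -or $IsWindows

if ( $vendor -eq 'msvc' ) {
    if ( -not $on_windows ) { throw 'msvc is only available on Windows' }
    $compiler = 'cl'
    $linker   = 'link'
}
elseif ( $vendor -eq 'clang' ) {
    $compiler = 'clang++'
    $linker   = if ( $on_windows ) { 'lld-link' } else { 'ld.lld' }
}
else {
    throw "Unknown vendor: $vendor"
}

Write-Host "compiler: $compiler, linker: $linker"
```

Each branch can grow into the full inline build for that platform, or shrink to a single call into a platform-specific script.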

Env Path

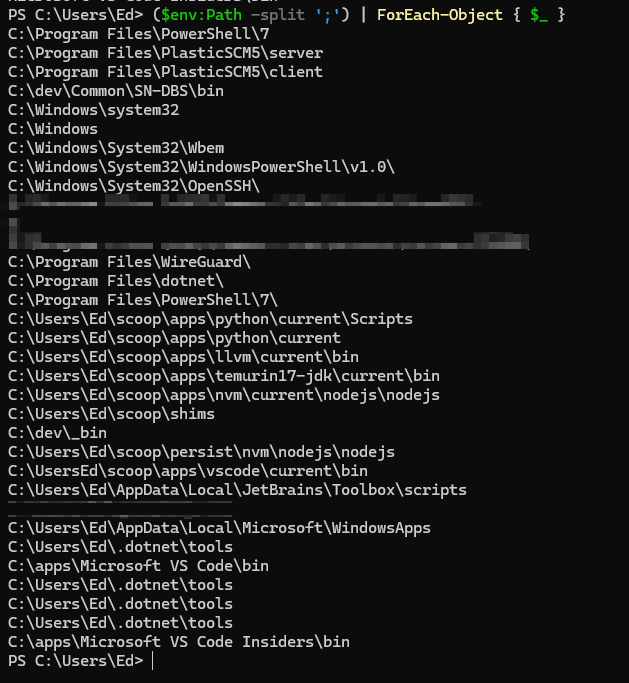

Normally the exposed paths to powershell do not include all development tools for C/C++:

This is done ( afaik ) to keep the exposed paths minimal instead of flooding the env paths with a bunch of development tooling ( such as on your usual linux server ), which can easily lead to dependency hell. The practice of constantly assuming that some global path to a dependency always exists is partially the reason containerization of software exists…

To set up the build environment with the relevant dependencies we need to launch either the developer command prompt or, for the purpose of this post, the developer powershell.

Normally people launch this script within an existing terminal via some sort of drag-and-drop or history tab-complete habit. In our case we’ll be making a script to auto-find the most relevant version on the machine and launch it:

$ErrorActionPreference = "Stop"

# Use vswhere to find the latest Visual Studio installation

$vswhere_out = & "C:\Program Files (x86)\Microsoft Visual Studio\Installer\vswhere.exe" -latest -property installationPath

if ($null -eq $vswhere_out) {

    Write-Host "ERROR: Visual Studio installation not found"

    exit 1

}

# Find Launch-VsDevShell.ps1 in the Visual Studio installation

$vs_path = $vswhere_out

$vs_devshell = Join-Path $vs_path "\Common7\Tools\Launch-VsDevShell.ps1"

if ( -not (Test-Path $vs_devshell) ) {

    Write-Host "ERROR: Launch-VsDevShell.ps1 not found in Visual Studio installation"

    Write-Host Tested path: $vs_devshell

    exit 1

}

# Launch the Visual Studio Developer Shell

Push-Location

& $vs_devshell @args

Pop-Location

$devshell = Join-Path $path_to 'devshell.ps1'

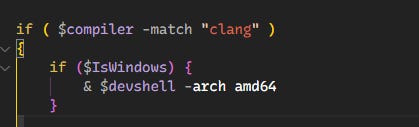

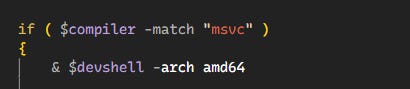

& $devshell -arch amd64

I’ll be placing this right after the compiler check, with a Windows wrap for clang, and on msvc just executing it immediately:

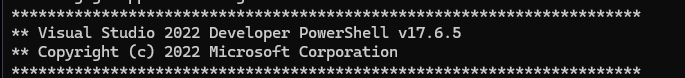

You’ll be greeted with a header prompt indicating the shell has properly loaded:

And if you now check the $env:PATH :

All windows runtime env dependencies should now be resolved.
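If you'd rather verify that than eyeball the path list, a small check along these lines can run right after the developer shell loads. It is only a sketch: `Get-MissingTools` is a hypothetical helper, and the tool list should match whatever your script actually invokes.

```powershell
# Hypothetical helper: returns the names of tools that cannot be found on the PATH.
function Get-MissingTools {
    param( [string[]] $Tools )

    $missing = @()
    foreach ( $tool in $Tools ) {
        # -CommandType Application restricts the lookup to executables on the PATH.
        $found = Get-Command $tool -CommandType Application -ErrorAction SilentlyContinue
        if ( -not $found ) {
            $missing += $tool
        }
    }
    return $missing
}

$missing = Get-MissingTools @( 'cl', 'link', 'clang++', 'lld-link' )
if ( $missing ) {
    Write-Host "Not found on PATH: $( $missing -join ', ' )"
}
```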

If you'd rather not use the MSVC developer environment and want something more Unix-like, just run everything within cygwin, mingw, or WSL.

CLI Flags, the knobs for the toolchain

After the environment is squared away, I recommend creating constants for all the flags you’ll be using. Here are the ones I’ve used.

Clang:

# https://clang.llvm.org/docs/ClangCommandLineReference.html

$flag_compile = '-c'

$flag_debug = '-g'

$flag_debug_codeview = '-gcodeview'

$flag_define = '-D'

$flag_include = '-I'

$flag_library = '-l'

$flag_library_path = '-L'

$flag_link_win = '-Wl,'

$flag_link_win_subsystem_console = '/SUBSYSTEM:CONSOLE'

$flag_link_win_machine_32 = '/MACHINE:X86'

$flag_link_win_machine_64 = '/MACHINE:X64'

$flag_link_win_debug = '/DEBUG'

$flag_link_win_pdb = '/PDB:'

$flag_no_optimization = '-O0'

$flag_path_output = '-o'

$flag_preprocess_non_intergrated = '-no-integrated-cpp'

$flag_profiling_debug = '-fdebug-info-for-profiling'

$flag_target_arch = '-target'

$flag_wall = '-Wall'

$flag_warning = '-W'

$flag_warning_as_error = '-Werror'

$flag_win_nologo = '/nologo'

MSVC:

# https://learn.microsoft.com/en-us/cpp/build/reference/compiler-options-listed-by-category?view=msvc-170

$flag_compile = '/c'

$flag_debug = '/Zi'

$flag_define = '/D'

$flag_include = '/I'

$flag_full_src_path = '/FC'

$flag_nologo = '/nologo'

$flag_dll = '/LD'

$flag_dll_debug = '/LDd'

$flag_linker = '/link'

$flag_link_machine_32 = '/MACHINE:X86'

$flag_link_machine_64 = '/MACHINE:X64'

$flag_link_path_output = '/OUT:'

$flag_link_rt_dll = '/MD'

$flag_link_rt_dll_debug = '/MDd'

$flag_link_rt_static = '/MT'

$flag_link_rt_static_debug = '/MTd'

$flag_link_subsystem_console = '/SUBSYSTEM:CONSOLE'

$flag_link_subsystem_windows = '/SUBSYSTEM:WINDOWS'

$flag_out_name = '/OUT:'

$flag_path_interm = '/Fo'

$flag_path_debug = '/Fd'

$flag_path_output = '/Fe'

$flag_preprocess_conform = '/Zc:preprocessor'

This simple aliasing, believe it or not, will resolve most of the headaches when dealing with the toolchain. If you name the constants with a similar pattern across platforms, the code below will change minimally.
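To make the "similar pattern across platforms" point concrete, here is a small sketch that picks the flag spellings once per vendor and then reuses the same argument-building code for both. The `Get-Flags` helper and the `project` include directory are assumptions for illustration; the flag values are a subset of the constants listed above.

```powershell
# Hypothetical helper returning a small subset of the flag constants per vendor.
function Get-Flags {
    param( [string] $Vendor )

    if ( $Vendor -eq 'msvc' ) {
        return @{ compile = '/c'; debug = '/Zi'; define = '/D'; include = '/I' }
    }
    return @{ compile = '-c'; debug = '-g'; define = '-D'; include = '-I' }
}

# The same argument-building code serves both vendors.
foreach ( $vendor in 'clang', 'msvc' ) {
    $flags         = Get-Flags $vendor
    $compiler_args = @(
        $flags.debug,
        "$( $flags.define )GEN_TIME",
        "$( $flags.include )project"
    )
    Write-Host "$vendor : $compiler_args"
}
```

Plain variables, as in the listings above, work just as well; the hashtable only keeps the sketch compact.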

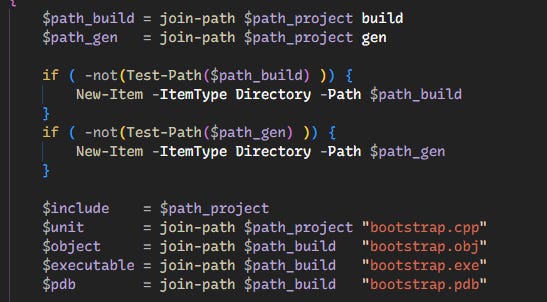

Next we want to resolve any specific paths for the build target desired if the script has multiple for the same platform:

Make sure to also create directories for the generated content. In this case I make two: build and gen, within the project folder. Build will hold the compiler & linker output; gen will contain the code generated when running the bootstrap program.

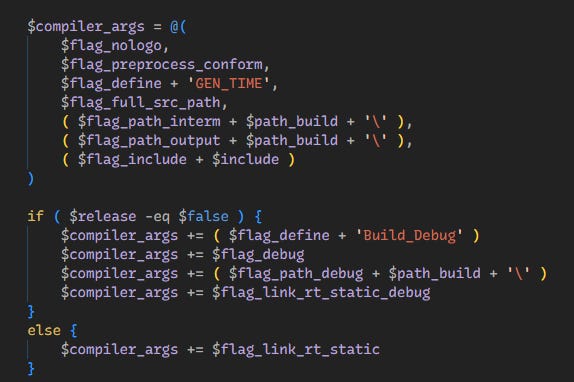

Populating the compiler arguments:

This is highly dependent on the compiler. Clang is nice and offers clang-cl as a compatibility interface that accepts traditional msvc arguments. We won’t be using it, so that the script shows the differences you’ll find if you leave Windows for another OS environment.

With PowerShell, building up the argument list is just that: a list, done with an array container. This eases some of the difficulty, but there are still some annoyances to deal with.

The compiler arguments expect the flag for defines to be glued to the define identifier right after it:

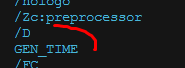

Instead of /D GEN_TIME it's /DGEN_TIME

This occurs as well with other flags that take a path. You’ll see in the above screenshot that I use the following syntax pattern to enforce this in PowerShell:

( <concatenated> + <strings> + <with '+' operator> )

This has to be done so the flag and its value end up glued together as one element of the array. Otherwise you get this in the compiler output:

While it's not required, the unit file should be put at either the end or the beginning of the arg-list so it’s easy to spot when printed.
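One way the gluing can go wrong, sketched with made-up values: inside a comma-separated list, PowerShell's comma operator binds tighter than `+`, so unparenthesized concatenations collapse into a single string rather than separate arguments. The unit file name and the `project` include directory below are placeholders.

```powershell
$flag_define  = '/D'
$flag_include = '/I'
$flag_compile = '/c'
$unit         = 'bootstrap.cpp'   # placeholder unit file name

# Without parentheses: ',' is evaluated before '+', so the whole line
# becomes one string and therefore one (wrong) argument.
$broken = @( $flag_define + 'GEN_TIME', $flag_include + 'project' )

# With parentheses: each flag is glued to its value and stays its own element.
$compiler_args = @(
    ( $flag_define  + 'GEN_TIME' ),
    ( $flag_include + 'project'  )
)

# Unit file goes last so it is easy to spot when the list is printed.
$compiler_args += $flag_compile, $unit

Write-Host "broken : $( $broken.Count ) element(s)"
Write-Host "glued  : $( $compiler_args.Count ) element(s)"
$compiler_args | ForEach-Object { Write-Host "  $_" }
```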

$compiler_args += $flag_compile, $unit

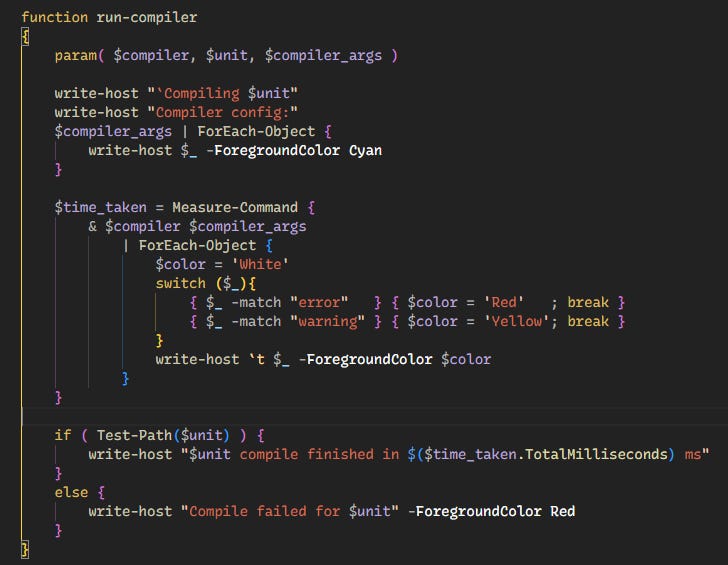

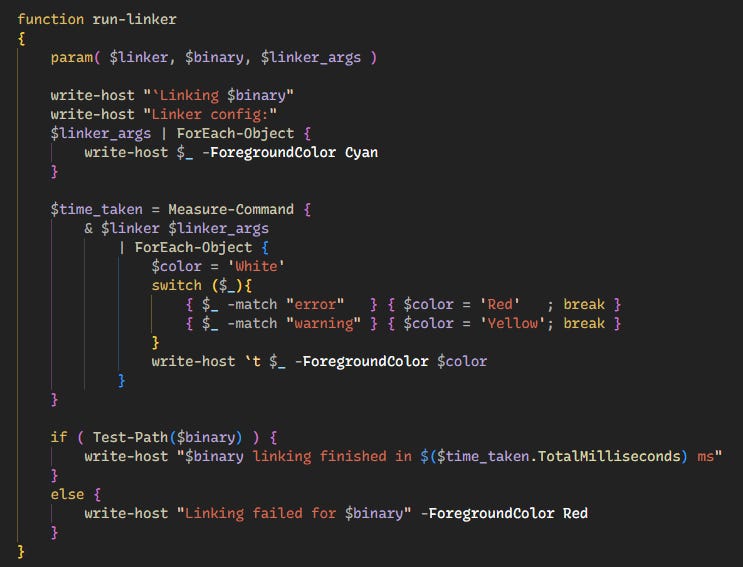

run-compiler cl $unit $compiler_args

When all args are in, I immediately run the compiler using a helper function:

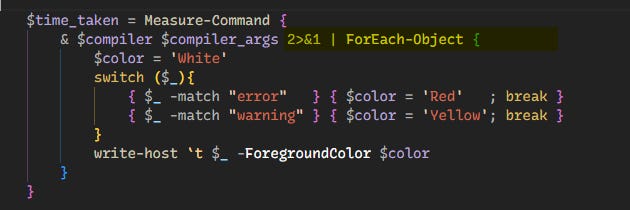

It benches time and attempts to add debug colors if possible ( with clang it does not but thankfully clang has a flag for that ).

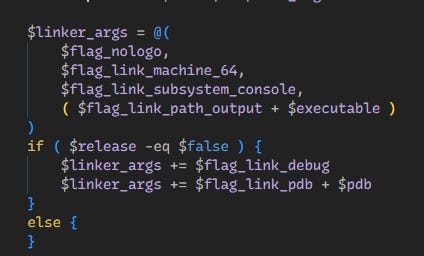

Next comes the linker arguments:

Also straightforward since all the variables indicate their purpose quite clearly.

Running is exactly what you think it’s like:

$linker_args += $object

run-linker link $executable $linker_args

Just include the object file/s targeted at the end and let it rip with the helper function:

( Which is exactly like run-compiler except it has linker logs )

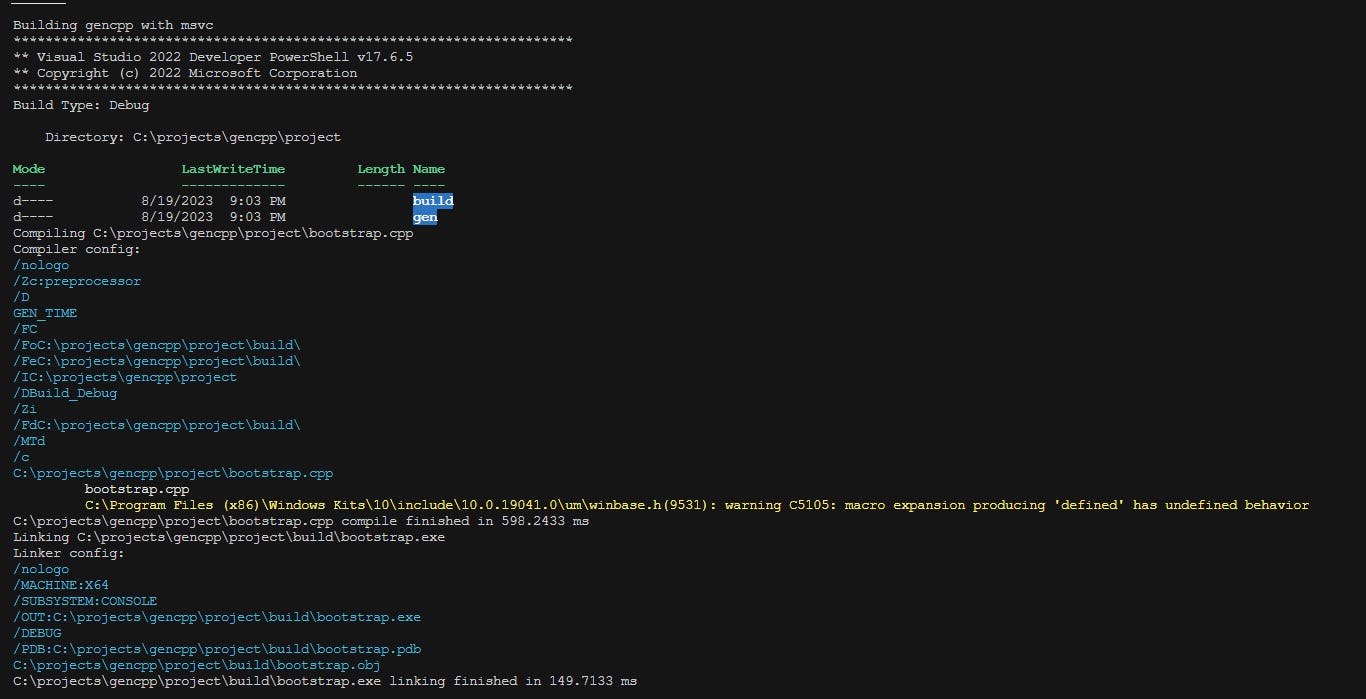

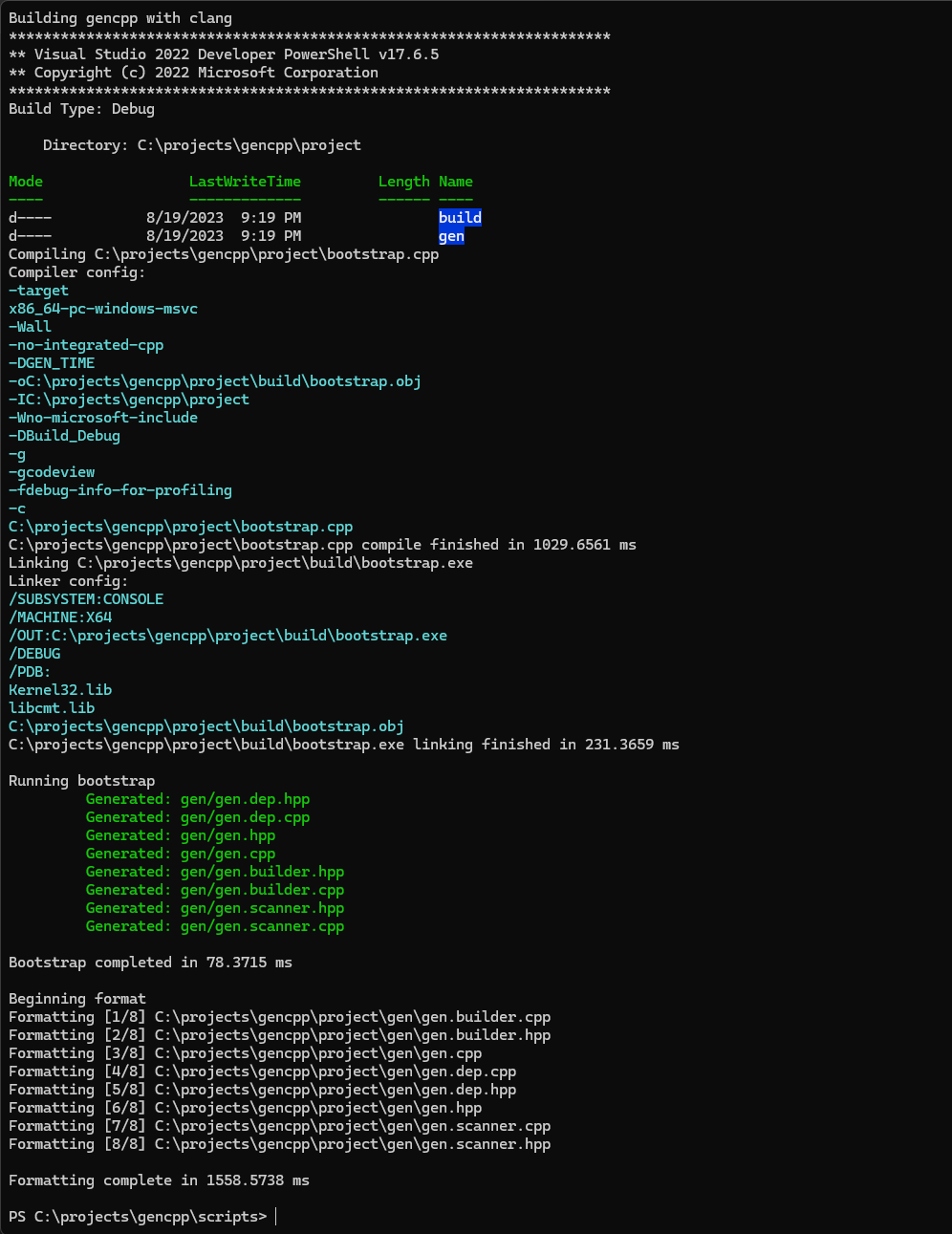

If all goes well something like the following should appear in your build script:

And that’s it for msvc. From here I have additional stages in the script for running the generator and formatting the resulting files.

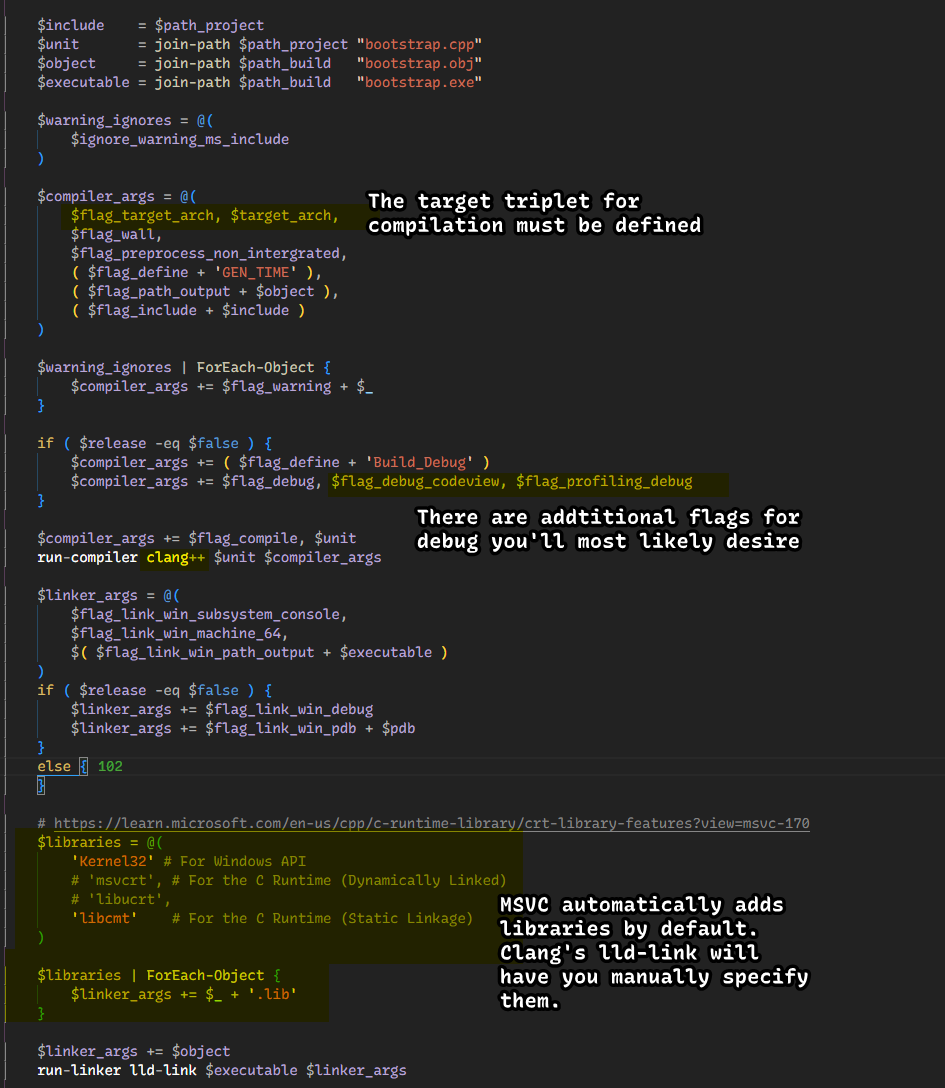

For clang, it’s pretty much the same deal with just a few minor changes:

The result of the build will be the following:

And that’s pretty much it! I hope this was at least useful at clearing up the basics.

Bonus stuff

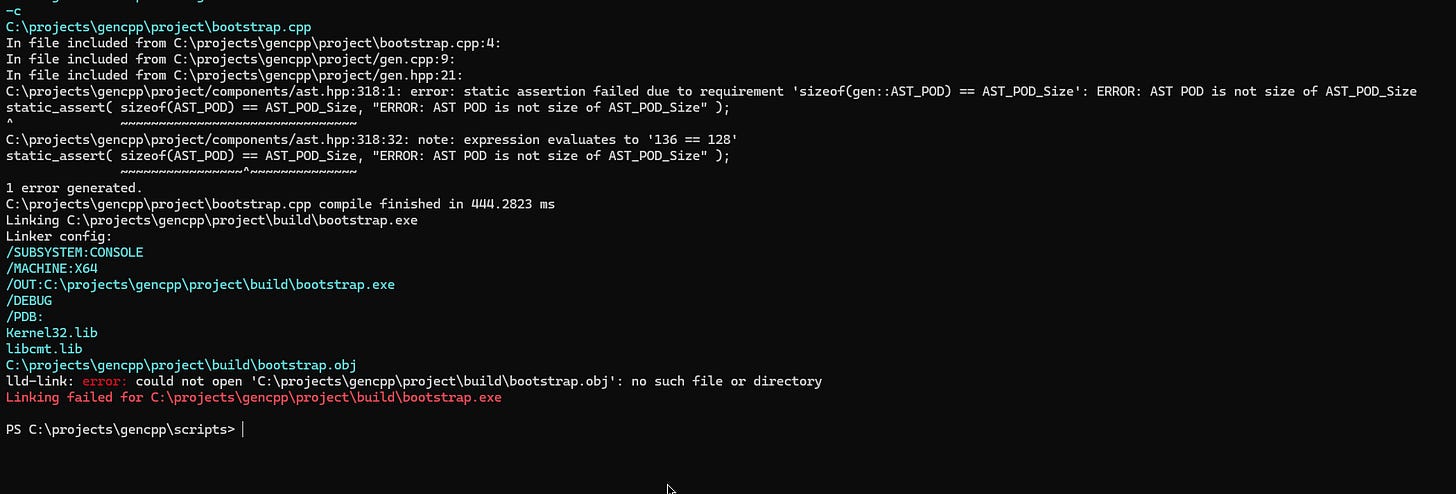

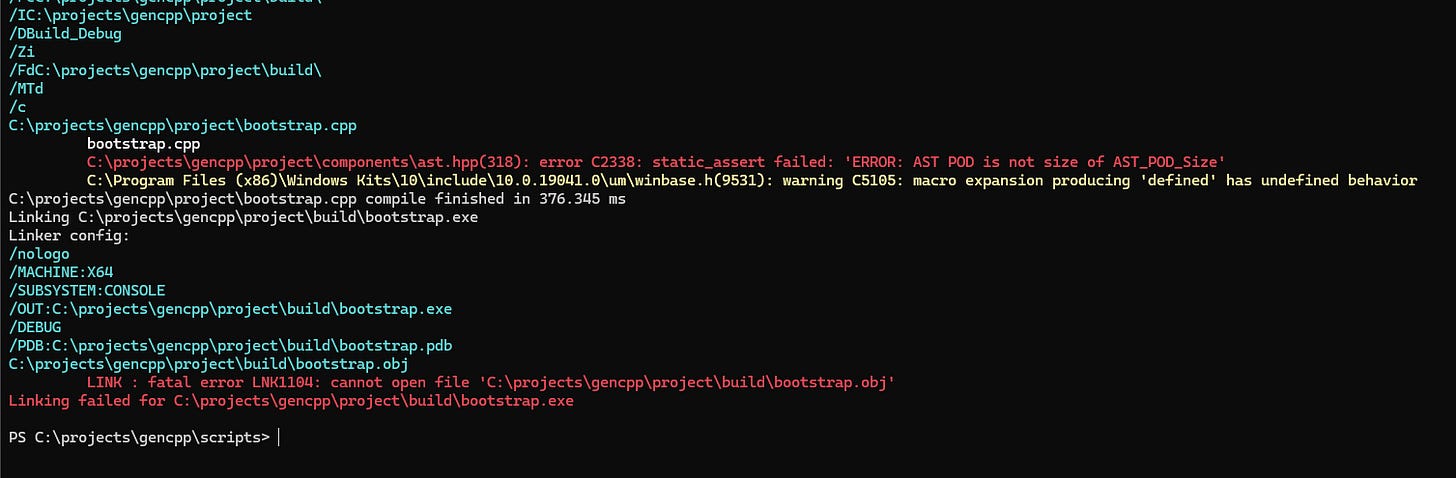

I’m now going to purposefully cause a compile error to show an issue with clang vs msvc under PowerShell:

vs MSVC:

The formatting and colorization specified in the run-<tool> helper functions failed.

We can fix this with the -fno-color-diagnostics flag in the compiler arguments and modifying the helper function in the following way:

The 2>&1 redirection combines the standard output and error streams into one, so the compiler's diagnostics no longer skip the helper's formatting when the no-color option is also used.
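Since the helper only appears in screenshots, here is a rough stand-in showing where the redirection sits. `Invoke-Tool`, its parameters, and the color choices are assumptions and not the actual run-compiler / run-linker code. It also assumes PowerShell 7.2 or later, where redirected stderr from a native program does not trip `$ErrorActionPreference = "Stop"`.

```powershell
# Hypothetical stand-in for the run-<tool> helpers.
function Invoke-Tool {
    param(
        [string]   $Executable,
        [string[]] $Arguments
    )

    $timer = [System.Diagnostics.Stopwatch]::StartNew()

    # 2>&1 folds the error stream into the output stream, so diagnostics
    # pass through the same coloring loop as everything else.
    & $Executable @Arguments 2>&1 | ForEach-Object {
        $line  = "$_"
        $color = 'White'
        if     ( $line -match 'error'   ) { $color = 'Red'    }
        elseif ( $line -match 'warning' ) { $color = 'Yellow' }
        Write-Host $line -ForegroundColor $color
    }
    $exit_code = $LASTEXITCODE

    $timer.Stop()
    Write-Host "$Executable finished in $( $timer.Elapsed.TotalMilliseconds ) ms"
    return $exit_code
}
```

A call would look something like `Invoke-Tool 'clang++' @( '-fno-color-diagnostics', '-c', 'bootstrap.cpp' )`, with the unit file name being a placeholder.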

Some generalization

After making enough of these scripts you’ll most likely find patterns, just like with regular code, where you can safely generalize.

For gencpp’s build script I changed the layout to the following after I got it working for the bootstrap, single-header, and testing build targets:

Import script helpers :

devshell.ps1, target_arch.psm1 ( used for clang to get the target triplet automatically )

Process arguments : Any dynamics for the build process ( debug builds, which compiler to use if multiple are supported, selectively building only specific aspects, etc )

Resolve toolchain configuration

Setup environment with all external paths that can be resolved with minimal hard-coding.

Set the compiler and linker based on default or user overrides.

Setup all flags relevant to the project for the compiler, linker, archiver, all the tools.

Define any functions that abstract the build pipeline. Do so only if the project’s build targets, or its build structure, is simple or consistent.

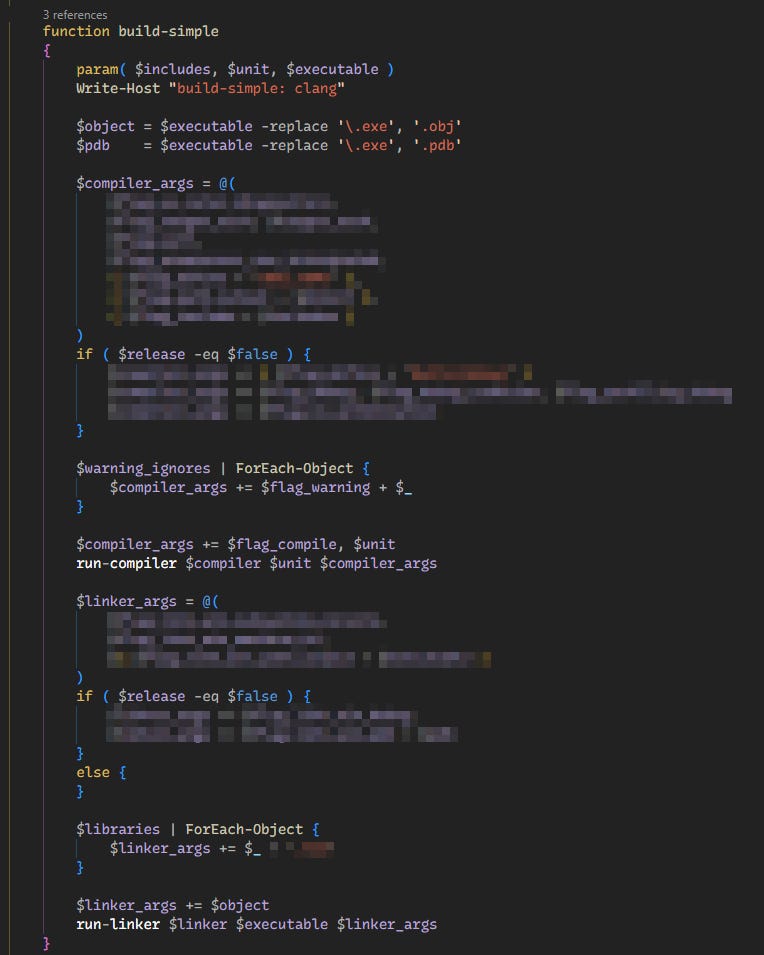

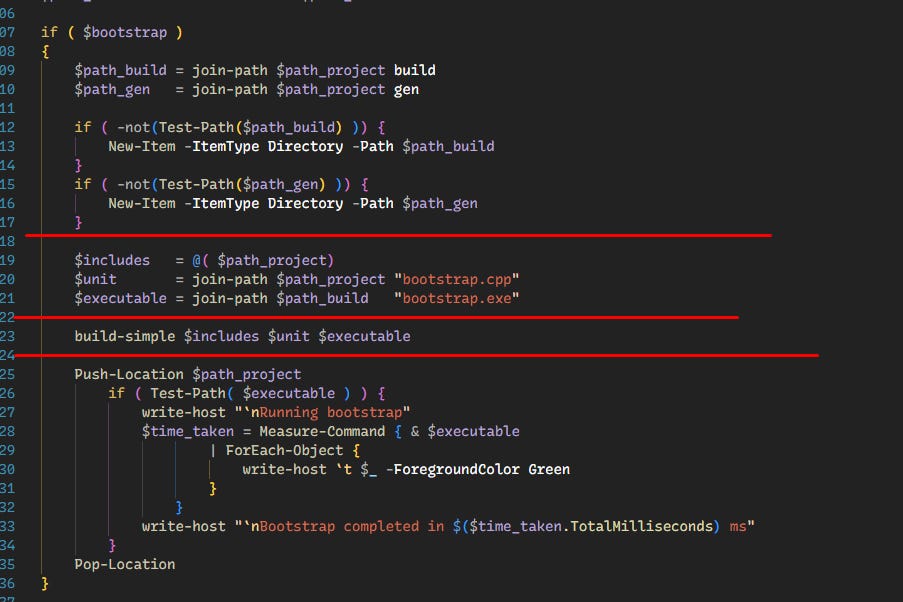

In gencpp’s case the entire build configuration could be reduced to a single function called build-simple:

This can be done because all targets use the single compilation unit pattern, AKA Unity Builds.

Building. For each target:

Resolve any context specific paths and create missing paths

Set the includes, translation unit file, and binary file

Run build-simple

Generate code for that target
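As a hedged sketch of what a function in the spirit of build-simple might take in and produce for msvc, the following dry-run version returns the two argument lists instead of invoking cl and link. `Get-SimpleBuildArgs` and all paths are hypothetical; the real function lives in the gencpp build script and will differ.

```powershell
# Hypothetical dry-run stand-in: builds the cl and link argument lists for one target.
function Get-SimpleBuildArgs {
    param(
        [string[]] $Includes,
        [string]   $Unit,
        [string]   $Executable
    )

    # Single compilation unit: one object file, named after the executable.
    $object = [System.IO.Path]::ChangeExtension( $Executable, '.obj' )

    $compiler_args = @( '/nologo', '/Zi' )
    foreach ( $include in $Includes ) {
        $compiler_args += ( '/I' + $include )
    }
    $compiler_args += ( '/Fo' + $object )
    $compiler_args += '/c', $Unit

    $linker_args = @( '/nologo', '/DEBUG', ( '/OUT:' + $Executable ), $object )

    return @{ compiler = $compiler_args; linker = $linker_args }
}

$build = Get-SimpleBuildArgs -Includes 'project' -Unit 'bootstrap.cpp' -Executable 'build/bootstrap.exe'
Write-Host "cl   $( $build.compiler )"
Write-Host "link $( $build.linker )"
```

Because every target is a single unit, the per-target code shrinks to setting three values and calling one function.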

Example for the bootstrap target:

Ending thoughts

You could say this script behaves as a build system. Well, yes. If you look at what Scons and Meson are, they're using the Python scripting language to do the same thing, but bloated to handle every edge case for every single project layout under the sun.

MSBuild is no different. It’s a dedicated application that organizes a codebase into a solution. The solution may have multiple projects, and they each have their own project properties that outline how MSVC should compile its translation units with cl and link with link.

There are possible alternatives. You could create your own version of clang and lld-link that lets the user pass a build configuration, and then just specify everything you need upfront. However, the clang library is not something easy to wrangle.

Microsoft doesn’t open source cl or link so there is no luck there.

The cuik compiler is making a flexible API to its toolchain.

Hopefully we’ll see better tools in a decade.